Improve Security Of Your AWS Account

by Valts Ausmanis · June 26, 2024

Nowadays, with AI productivity tools like GitHub Copilot, AWS CodeWhisperer, and ChatGPT, you can get things done much faster than in the old days, when you had to spend a lot of time googling for the right answers and solutions. This definitely speeds up the development and deployment of new products and features. However, it also introduces new challenges: we tend not to think much about the underlying details and security-related configurations of our AWS accounts and services, and we somehow trust that the default security settings will work for our use case. You would be surprised how often, during security checks requested by our customers, I have found that their AWS accounts and services don’t have proper foundational AWS security controls applied. That’s why I suggest that anyone who works with the AWS cloud spend a couple of minutes reading this list of ways to improve the security of your AWS accounts.

In This Article

- Foundational AWS Security Controls That Should Be Always Enforced

- Enforce a Password Policy Requiring Users to Have Strong Passwords

- Enable Mandatory Multi-factor Authentication (MFA) for Users to Log In

- Rotate Old Credentials

- Use Temporary Credentials to Grant Access to AWS accounts

- Restrict the Use of the Root User

- Set Valid Email Addresses as Contacts to Receive Communication From AWS

- Create Least-Privilege IAM Policies

- Prevent Public Access to Private S3 Buckets

- Create Billing Alarms

- Delete Unused VPCs, Subnets, and Security Groups

- Configure Stricter VPC Security Groups for Both Public and Private Traffic

- Utilize AWS Native Security Services

- Additional Ways to Improve Security of Your AWS Account

- Start Using AWS IAM Identity Center and AWS Organizations

- Limit IAM Permissions for Development Users

- Configure the AWS CLI to Use IAM Identity Center Credentials

- Always Verify Default Settings Set by Your IaC Framework

- Limit IAM Permissions for IaC Deployment Roles

- Avoid Overly Descriptive Default API Gateway Response Messages

- Use Security Response Headers for Your CloudFront Distribution

- Override the “Server” Response Header for Your CloudFront Distribution

- Summary

Foundational AWS Security Controls That Should Be Always Enforced

There are many ways to improve the security of your AWS account, and several of them depend on the actual workloads or applications you have deployed in the AWS cloud (for example, using security response headers for your CloudFront distribution). However, there are foundational AWS security controls that should always be enforced for any AWS account.

From my experience, there is a good chance that at least one of the foundational AWS security controls listed below is not applied to your AWS account.

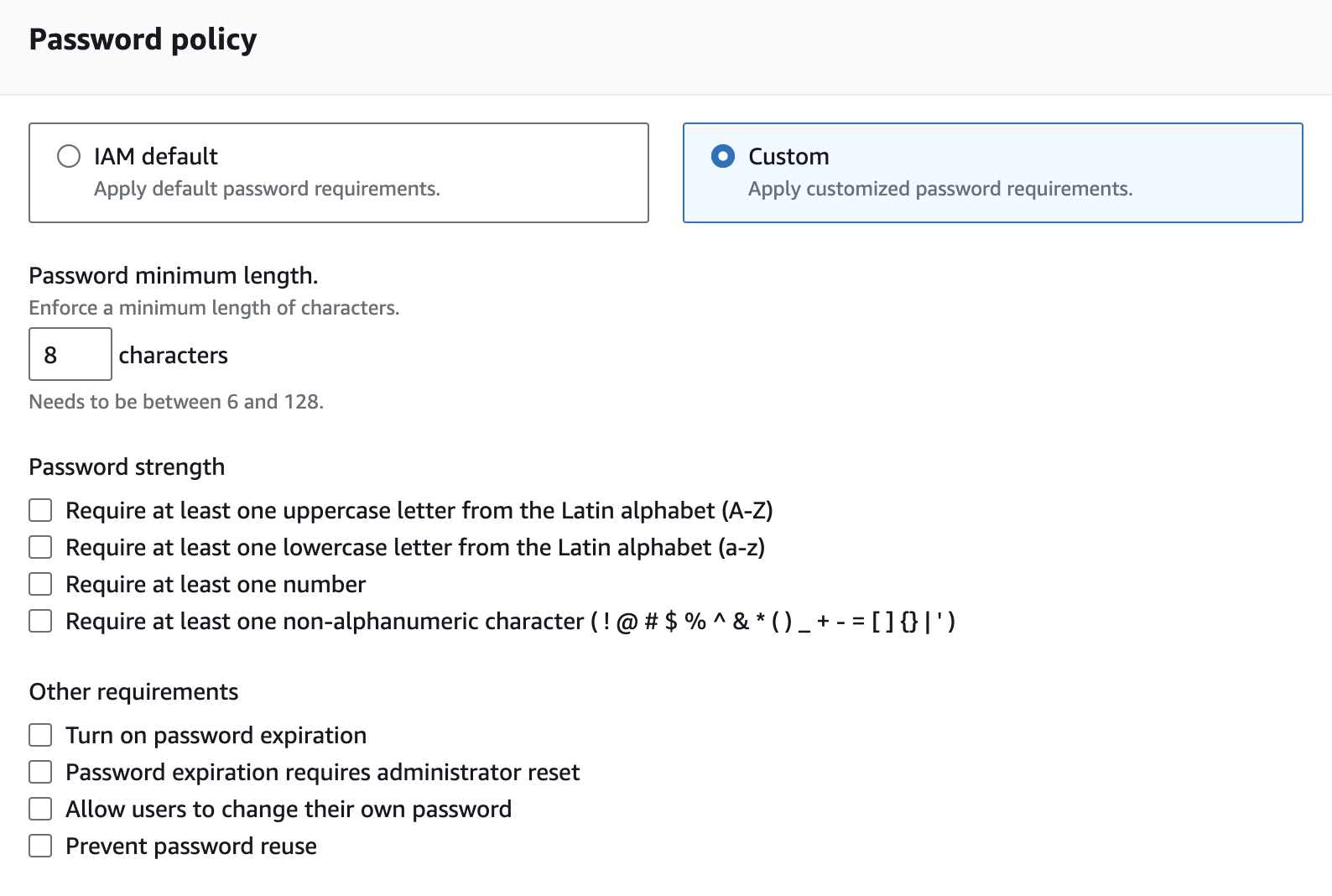

Enforce a Password Policy Requiring Users to Have Strong Passwords

Enforcing strong and long passwords for users shouldn't be a surprise to anyone, but this should still be on the checklist for every security team out there.
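As a sketch, the settings below use the field names of the IAM `UpdateAccountPasswordPolicy` API. With the AWS SDK for JavaScript v3, this is the input of `UpdateAccountPasswordPolicyCommand`. The specific values are assumptions, so choose ones that match your own requirements. The hypothetical `meetsPolicy` helper only illustrates what the character rules mean. IAM enforces the policy itself, and only for IAM users; IAM Identity Center users follow Identity Center's own password requirements.

```typescript
// Shape mirrors the input of IAM's UpdateAccountPasswordPolicy API.
// The values are illustrative assumptions, not official AWS recommendations.
interface PasswordPolicy {
  MinimumPasswordLength: number;
  RequireSymbols: boolean;
  RequireNumbers: boolean;
  RequireUppercaseCharacters: boolean;
  RequireLowercaseCharacters: boolean;
  MaxPasswordAge: number; // days before a password expires
  PasswordReusePrevention: number; // previous passwords that can't be reused
}

const strongPolicy: PasswordPolicy = {
  MinimumPasswordLength: 14,
  RequireSymbols: true,
  RequireNumbers: true,
  RequireUppercaseCharacters: true,
  RequireLowercaseCharacters: true,
  MaxPasswordAge: 90,
  PasswordReusePrevention: 24,
};

// Hypothetical helper: approximates the character rules locally.
// IAM's accepted symbol set is narrower than "any non-alphanumeric character".
function meetsPolicy(password: string, policy: PasswordPolicy): boolean {
  if (password.length < policy.MinimumPasswordLength) return false;
  if (policy.RequireSymbols && !/[^A-Za-z0-9]/.test(password)) return false;
  if (policy.RequireNumbers && !/[0-9]/.test(password)) return false;
  if (policy.RequireUppercaseCharacters && !/[A-Z]/.test(password)) return false;
  if (policy.RequireLowercaseCharacters && !/[a-z]/.test(password)) return false;
  return true;
}

console.log(meetsPolicy("password123", strongPolicy)); // false: too short, no symbol or uppercase
console.log(meetsPolicy("Correct-Horse-42-Battery", strongPolicy)); // true
```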

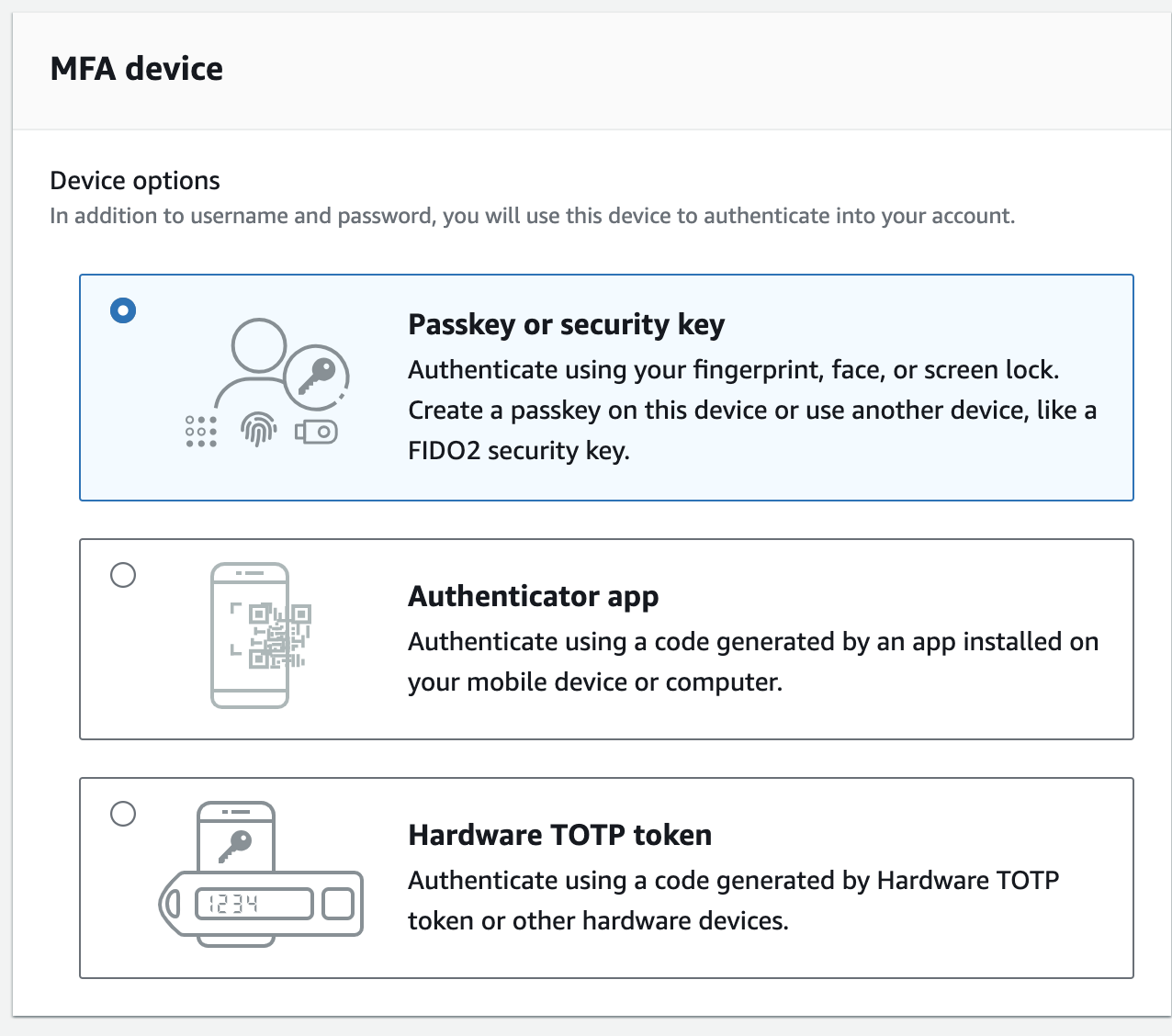

Enable Mandatory Multi-factor Authentication (MFA) for Users to Log In

MFA has been around for a while and should be enabled for any service or application that processes private data needing secure access. It adds an additional layer of security in the user authentication flow and has saved many headaches for sysadmins and security teams.
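For IAM users, a common way to make MFA mandatory is a policy that denies everything except MFA setup unless the request was authenticated with MFA. The sketch below is modeled on the example in the AWS IAM documentation; the exact list of allowed setup actions is an assumption to adjust. This Deny statement grants nothing by itself. Attach it alongside the policies that actually grant permissions.

```typescript
// Deny all actions except those needed to set up MFA, unless the caller
// authenticated with MFA. BoolIfExists also matches requests where the
// aws:MultiFactorAuthPresent key is absent (e.g. long-term access keys).
const requireMfaPolicy = {
  Version: "2012-10-17",
  Statement: [
    {
      Sid: "DenyAllExceptMfaSetupIfNoMFA",
      Effect: "Deny",
      NotAction: [
        "iam:CreateVirtualMFADevice",
        "iam:EnableMFADevice",
        "iam:GetUser",
        "iam:ListMFADevices",
        "iam:ListVirtualMFADevices",
        "iam:ResyncMFADevice",
        "iam:ChangePassword",
        "sts:GetSessionToken",
      ],
      Resource: "*",
      Condition: { BoolIfExists: { "aws:MultiFactorAuthPresent": "false" } },
    },
  ],
};

// Print the JSON document to paste into the IAM console or an IaC template.
console.log(JSON.stringify(requireMfaPolicy, null, 2));
```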

Rotate Old Credentials

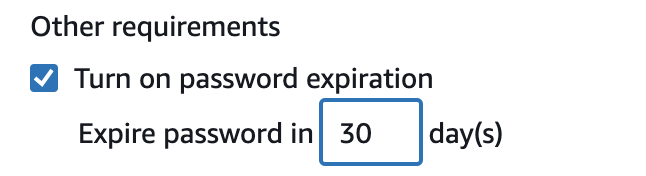

First of all, you shouldn’t use persistent AWS access keys at all. However, if there is a legacy use case where you can’t use temporary credentials, ensure that you rotate these frequently. This applies to user passwords as well, where you should enforce regular changes through password policy rules.
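To find keys that are overdue for rotation, you can check the creation dates that IAM's `ListAccessKeys` API returns. The sketch below works on a subset of that `AccessKeyMetadata` shape. The 90-day threshold is an assumption, and the sample key IDs are AWS documentation placeholders.

```typescript
// Subset of the AccessKeyMetadata shape returned by IAM's ListAccessKeys API.
interface AccessKeyMetadata {
  UserName: string;
  AccessKeyId: string;
  Status: "Active" | "Inactive";
  CreateDate: Date;
}

const DAY_MS = 24 * 60 * 60 * 1000;

// Returns active keys older than maxAgeDays (90 days is an assumed threshold).
function keysDueForRotation(keys: AccessKeyMetadata[], now: Date, maxAgeDays = 90): AccessKeyMetadata[] {
  return keys.filter(
    (k) => k.Status === "Active" && (now.getTime() - k.CreateDate.getTime()) / DAY_MS > maxAgeDays,
  );
}

const now = new Date("2024-06-26T00:00:00Z");
const keys: AccessKeyMetadata[] = [
  { UserName: "ci-legacy", AccessKeyId: "AKIAIOSFODNN7EXAMPLE", Status: "Active", CreateDate: new Date("2023-01-15T00:00:00Z") },
  { UserName: "ci-legacy", AccessKeyId: "AKIAI44QH8DHBEXAMPLE", Status: "Active", CreateDate: new Date("2024-06-01T00:00:00Z") },
];

// Only the key created in 2023 is reported.
console.log(keysDueForRotation(keys, now).map((k) => k.AccessKeyId));
```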

Use Temporary Credentials to Grant Access to AWS accounts

AWS best practice is to use temporary credentials to grant access to AWS accounts and resources. The main service that allows us to achieve this is AWS Security Token Service (AWS STS). This service works nicely together with many other AWS services like:

- Amazon Cognito Identity Pool. Generates temporary AWS credentials for users of your application.

- Amazon EC2. When you assign an IAM role to an EC2 instance, the instance gets temporary credentials from STS.

- AWS Lambda. When you assign an IAM role to a Lambda function, the function uses STS to get temporary credentials to access other AWS services.

It’s possible to use federated identities from a centralized identity provider (such as AWS IAM Identity Center, Okta, Active Directory etc.) to authenticate users to access AWS accounts and resources.
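The EC2 and Lambda cases above rely on an IAM role whose trust policy allows the service to call `sts:AssumeRole`. A minimal sketch of such a trust policy follows. EC2 additionally needs the role wrapped in an instance profile.

```typescript
// Builds an IAM role trust policy that lets an AWS service assume the role
// through STS and receive temporary credentials for it.
function serviceTrustPolicy(servicePrincipal: string) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Principal: { Service: servicePrincipal },
        Action: "sts:AssumeRole",
      },
    ],
  };
}

// Lambda functions and EC2 instances use different service principals.
const lambdaTrust = serviceTrustPolicy("lambda.amazonaws.com");
const ec2Trust = serviceTrustPolicy("ec2.amazonaws.com");
console.log(JSON.stringify(lambdaTrust));
console.log(JSON.stringify(ec2Trust));
```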

Restrict the Use of the Root User

It’s no secret that, because of its unrestricted privileges, the root user shouldn’t be used for any daily tasks, except for the few billing and account management tasks that require it.
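To notice when the root user is used anyway, you can watch CloudTrail for root activity. The check below mirrors the metric filter commonly used for this in the CIS AWS Foundations Benchmark. It runs on a simplified, assumed subset of a CloudTrail record. In practice, you would express it as a CloudWatch Logs metric filter on your CloudTrail log group and alarm on it.

```typescript
// Minimal subset of a CloudTrail record (field names as in CloudTrail logs).
interface CloudTrailRecord {
  eventName: string;
  eventType: string;
  userIdentity: { type: string; invokedBy?: string };
}

// Equivalent of the filter:
// $.userIdentity.type = "Root" && $.userIdentity.invokedBy NOT EXISTS && $.eventType != "AwsServiceEvent"
function isRootActivity(r: CloudTrailRecord): boolean {
  return (
    r.userIdentity.type === "Root" &&
    r.userIdentity.invokedBy === undefined &&
    r.eventType !== "AwsServiceEvent"
  );
}

// Hypothetical sample records.
const records: CloudTrailRecord[] = [
  { eventName: "ConsoleLogin", eventType: "AwsConsoleSignIn", userIdentity: { type: "Root" } },
  { eventName: "ListBuckets", eventType: "AwsApiCall", userIdentity: { type: "IAMUser" } },
  { eventName: "Decrypt", eventType: "AwsServiceEvent", userIdentity: { type: "Root" } },
];

console.log(records.filter(isRootActivity).map((r) => r.eventName)); // only ConsoleLogin
```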

Set Valid Email Addresses as Contacts to Receive Communication From AWS

By setting valid email addresses as contacts, you ensure that important AWS billing, operations, and security notifications find their way to your inbox so that you can react to them properly. These notifications have helped me a lot to be proactive and fix anything that needs to be fixed before it’s too late.
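The billing, operations, and security contacts can be set with the AWS Account Management API. With the SDK v3, use `PutAlternateContactCommand` from `@aws-sdk/client-account`. The sketch below only builds and sanity-checks the inputs. The names, addresses, and phone numbers are placeholders. A shared team mailbox is an assumed good practice, so that notifications survive people changing roles.

```typescript
type AlternateContactType = "BILLING" | "OPERATIONS" | "SECURITY";

interface AlternateContact {
  Name: string;
  Title: string;
  EmailAddress: string;
  PhoneNumber: string;
}

// Builds one PutAlternateContact input per contact type and rejects
// obviously malformed email addresses (a rough check, not full validation).
function alternateContactInputs(contacts: Record<AlternateContactType, AlternateContact>) {
  return (Object.keys(contacts) as AlternateContactType[]).map((type) => {
    const contact = contacts[type];
    if (!/^[^@\s]+@[^@\s]+\.[^@\s]+$/.test(contact.EmailAddress)) {
      throw new Error(`Invalid email for ${type}: ${contact.EmailAddress}`);
    }
    return { AlternateContactType: type, ...contact };
  });
}

// Placeholder values for illustration only.
const inputs = alternateContactInputs({
  BILLING: { Name: "Finance Team", Title: "Billing", EmailAddress: "billing@example.com", PhoneNumber: "+10000000000" },
  OPERATIONS: { Name: "Ops Team", Title: "Operations", EmailAddress: "ops@example.com", PhoneNumber: "+10000000000" },
  SECURITY: { Name: "Security Team", Title: "Security", EmailAddress: "security@example.com", PhoneNumber: "+10000000000" },
});
console.log(inputs.map((i) => `${i.AlternateContactType}: ${i.EmailAddress}`));
```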

Create Least-Privilege IAM Policies

This might sound like old news, but I bet if you were to go to the IAM policies section now and check your custom policies, you would definitely find overly permissive IAM policies. There could be many reasons for this, but the most common one is that we tend to be lazy, and it's time-consuming to define specific policies for different computing tasks. In an ideal world, each of our Lambda functions, EC2 instances, ECS tasks, EKS pods, etc., should have only the IAM permissions needed to fulfill a specific use case or task.
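To make "overly permissive" concrete, the sketch below compares a typical broad policy with a scoped alternative for a hypothetical function that only reads one DynamoDB table. The account ID, Region, and table name are placeholders. The `overlyPermissive` helper is a rough heuristic that ignores `NotAction`, conditions, and other policy elements. IAM Access Analyzer policy validation does a much more thorough job.

```typescript
interface PolicyStatement {
  Effect: "Allow" | "Deny";
  Action: string | string[];
  Resource: string | string[];
}
interface PolicyDocument {
  Version: string;
  Statement: PolicyStatement[];
}

const toArray = (v: string | string[]): string[] => (Array.isArray(v) ? v : [v]);

// Flags Allow statements that use a wildcard action ("*" or "service:*")
// or a "*" resource.
function overlyPermissive(doc: PolicyDocument): PolicyStatement[] {
  return doc.Statement.filter(
    (s) =>
      s.Effect === "Allow" &&
      (toArray(s.Action).some((a) => a === "*" || a.endsWith(":*")) || toArray(s.Resource).includes("*")),
  );
}

// A broad policy that is common in practice.
const broad: PolicyDocument = {
  Version: "2012-10-17",
  Statement: [{ Effect: "Allow", Action: "dynamodb:*", Resource: "*" }],
};

// Least-privilege alternative: read-only access to a single table.
const scoped: PolicyDocument = {
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Allow",
      Action: ["dynamodb:GetItem", "dynamodb:Query"],
      Resource: "arn:aws:dynamodb:eu-west-1:123456789012:table/Users",
    },
  ],
};

console.log(overlyPermissive(broad).length); // 1
console.log(overlyPermissive(scoped).length); // 0
```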

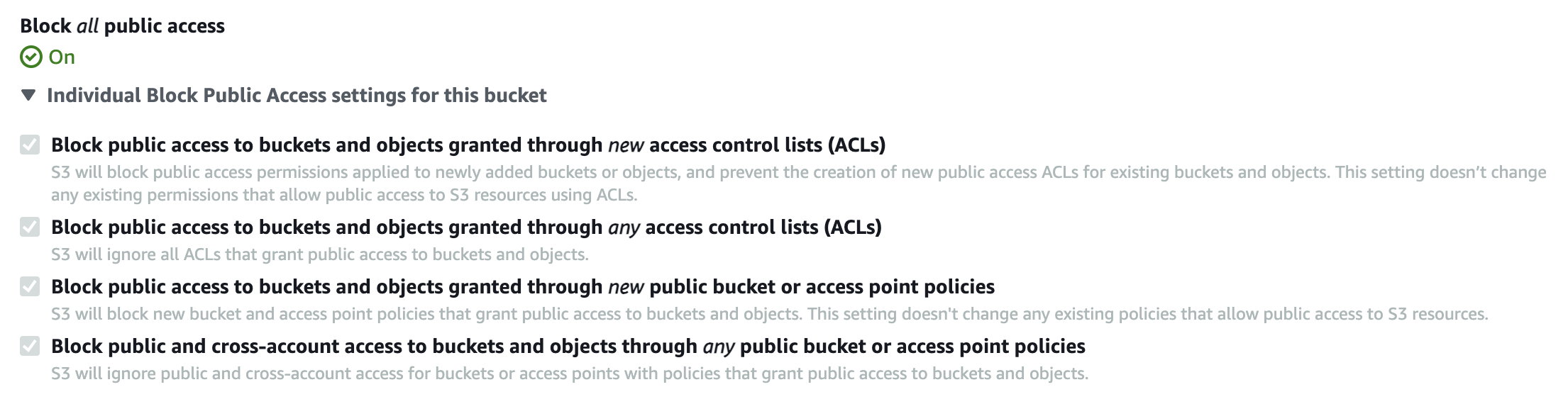

Prevent Public Access to Private S3 Buckets

This control mostly applies to S3 buckets created before April 2023, because since then all new S3 buckets have S3 Block Public Access enabled and ACLs disabled by default. But double-check this just in case!
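To double-check older buckets, you can read each bucket's Block Public Access settings with S3's `GetPublicAccessBlock` API. The sketch below takes those results as input and lists the buckets where any of the four settings is off or missing. The bucket names are hypothetical. Enabling Block Public Access at the account level covers every bucket at once.

```typescript
// Field names follow S3's PublicAccessBlockConfiguration.
interface PublicAccessBlockConfiguration {
  BlockPublicAcls?: boolean;
  IgnorePublicAcls?: boolean;
  BlockPublicPolicy?: boolean;
  RestrictPublicBuckets?: boolean;
}

// A bucket counts as fully protected only when all four settings are true.
// undefined represents a bucket with no configuration at all.
function isFullyBlocked(cfg: PublicAccessBlockConfiguration | undefined): boolean {
  return (
    cfg !== undefined &&
    cfg.BlockPublicAcls === true &&
    cfg.IgnorePublicAcls === true &&
    cfg.BlockPublicPolicy === true &&
    cfg.RestrictPublicBuckets === true
  );
}

function bucketsToReview(buckets: Record<string, PublicAccessBlockConfiguration | undefined>): string[] {
  return Object.entries(buckets)
    .filter(([, cfg]) => !isFullyBlocked(cfg))
    .map(([name]) => name);
}

const allOn = { BlockPublicAcls: true, IgnorePublicAcls: true, BlockPublicPolicy: true, RestrictPublicBuckets: true };
console.log(
  bucketsToReview({
    "legacy-assets": undefined, // created years ago, never configured
    "new-bucket": allOn,
    "partially-open": { ...allOn, BlockPublicPolicy: false },
  }),
); // legacy-assets and partially-open
```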

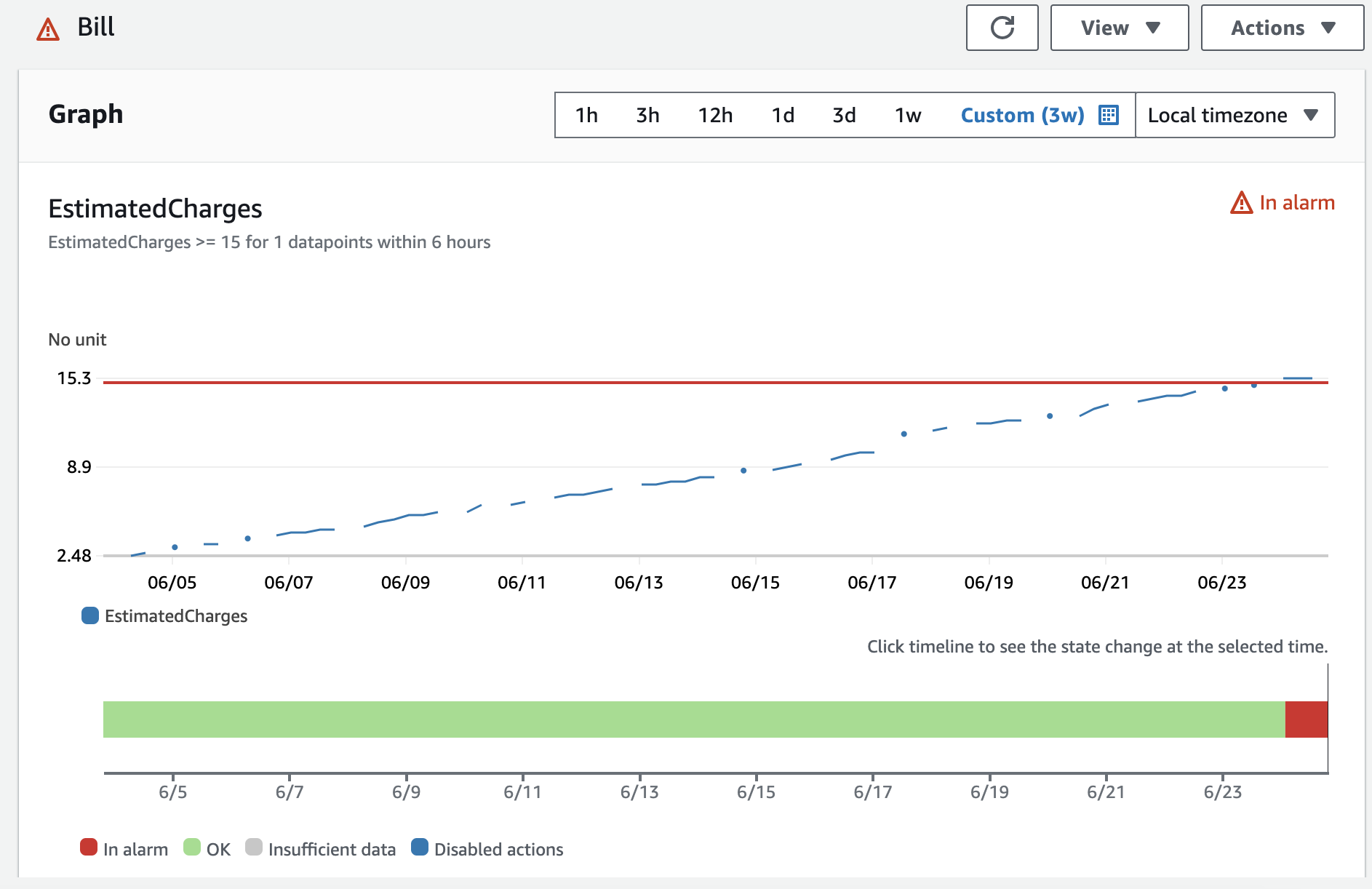

Create Billing Alarms

You should always monitor your AWS costs, even if you have an “unlimited budget”. The reason is simple: costs that are higher than expected usually mean increased AWS usage, and we should always investigate what happened and why, to ensure that our services are secure and operational and that no one is attempting to compromise them.

Whether you create a simple billing alarm or set up a cost budget with various notifications, the most important thing is that you are monitoring this.
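A simple billing alarm watches the `EstimatedCharges` metric in the `AWS/Billing` namespace. These metrics are only available in `us-east-1`, and only after billing alerts are enabled in your Billing preferences. The sketch below builds the input for CloudWatch's `PutMetricAlarm` API. The alarm name pattern, threshold, and SNS topic ARN are placeholders.

```typescript
// Builds a CloudWatch PutMetricAlarm input for the estimated-charges metric.
// Create the alarm in us-east-1, where billing metrics are published.
function billingAlarmInput(thresholdUsd: number, snsTopicArn: string) {
  return {
    AlarmName: `billing-over-${thresholdUsd}-usd`, // hypothetical naming scheme
    Namespace: "AWS/Billing",
    MetricName: "EstimatedCharges",
    Dimensions: [{ Name: "Currency", Value: "USD" }],
    Statistic: "Maximum",
    Period: 21600, // 6 hours; billing data is only updated a few times per day
    EvaluationPeriods: 1,
    Threshold: thresholdUsd,
    ComparisonOperator: "GreaterThanThreshold",
    AlarmActions: [snsTopicArn], // e.g. an SNS topic that emails your team
  };
}

const alarm = billingAlarmInput(100, "arn:aws:sns:us-east-1:123456789012:billing-alerts");
console.log(JSON.stringify(alarm, null, 2));
```

If you prefer AWS Budgets, the same idea applies. The budget sends the notification once actual or forecasted spend crosses your threshold.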

Delete Unused VPCs, Subnets, and Security Groups

In every AWS region, a default VPC is created along with subnets, default security groups, etc. If you do not use the default VPC (for example, if you have serverless-only workloads or use your own custom VPC), you should delete it from all regions where it is unnecessary. This ensures that no one on your team will, for example, “accidentally” create an EC2 instance using default security groups, potentially exposing resources unintentionally.
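Default VPCs can be recognized by the `IsDefault` flag that EC2's `DescribeVpcs` API returns in each Region. The sketch below lists the default VPCs that are candidates for deletion. It takes assumed per-Region results and a set of Regions where you still rely on the default VPC. The VPC IDs are placeholders. Deleting a VPC through the API requires removing dependent resources, such as its internet gateway and subnets, first.

```typescript
// Subset of the Vpc shape returned by EC2 DescribeVpcs.
interface Vpc {
  VpcId: string;
  IsDefault: boolean;
  CidrBlock: string;
}

interface DeletionCandidate {
  region: string;
  vpcId: string;
}

// Returns default VPCs in Regions where you don't intentionally use them.
function defaultVpcsToDelete(vpcsByRegion: Record<string, Vpc[]>, regionsUsingDefaultVpc: Set<string>): DeletionCandidate[] {
  const result: DeletionCandidate[] = [];
  for (const [region, vpcs] of Object.entries(vpcsByRegion)) {
    for (const vpc of vpcs) {
      if (vpc.IsDefault && !regionsUsingDefaultVpc.has(region)) {
        result.push({ region, vpcId: vpc.VpcId });
      }
    }
  }
  return result;
}

const vpcsByRegion: Record<string, Vpc[]> = {
  "eu-west-1": [
    { VpcId: "vpc-0aaa1111", IsDefault: true, CidrBlock: "172.31.0.0/16" },
    { VpcId: "vpc-0bbb2222", IsDefault: false, CidrBlock: "10.0.0.0/16" },
  ],
  "us-east-1": [{ VpcId: "vpc-0ccc3333", IsDefault: true, CidrBlock: "172.31.0.0/16" }],
};

console.log(defaultVpcsToDelete(vpcsByRegion, new Set<string>()));
```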

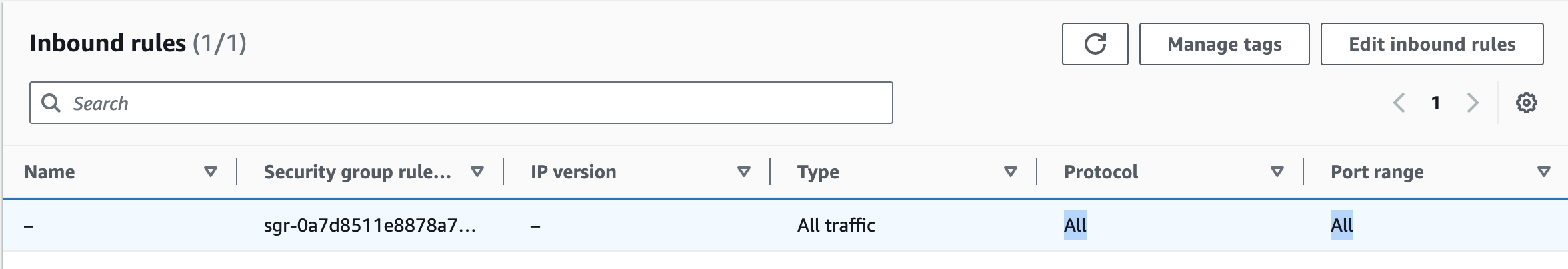

Configure Stricter VPC Security Groups for Both Public and Private Traffic

This is a common challenge that applies to everyone, and it is comparable to overly permissive IAM policies. Many teams do a really good job of configuring security group rules to limit public inbound traffic, but once the traffic is inside the VPC, the “strictness” somehow vanishes and more open rules get created for private traffic. However, the principle remains the same: always allow only the traffic necessary for a specific use case or task, whether it’s public or private.
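As a rough review aid, the sketch below scans inbound rules shaped like the `IpPermission` objects from EC2's `DescribeSecurityGroups` API. It flags rules that allow all traffic or that open a port to `0.0.0.0/0` or to the entire VPC CIDR. A flagged rule is not automatically wrong; a public load balancer legitimately accepts 443 from `0.0.0.0/0`. Each flagged rule should still have a reason. IPv6 ranges are ignored here, and the sample rules and group ID are placeholders. Rules that reference another security group, such as "only from the load balancer", are usually the stricter choice for private traffic.

```typescript
// Subset of EC2's IpPermission shape.
interface IpPermission {
  IpProtocol: string; // "-1" means all protocols and ports
  FromPort?: number;
  ToPort?: number;
  IpRanges?: { CidrIp: string }[];
  UserIdGroupPairs?: { GroupId: string }[];
}

function describeRule(rule: IpPermission, source: string): string {
  const ports = rule.IpProtocol === "-1" ? "all traffic" : `${rule.IpProtocol} ${rule.FromPort}-${rule.ToPort}`;
  return `${ports} from ${source}`;
}

// Flags CIDR-based rules that allow all traffic, or any port to 0.0.0.0/0
// or to the whole VPC CIDR.
function overlyOpenRules(rules: IpPermission[], vpcCidr: string): string[] {
  const findings: string[] = [];
  for (const rule of rules) {
    for (const range of rule.IpRanges ?? []) {
      const wholeNetwork = range.CidrIp === "0.0.0.0/0" || range.CidrIp === vpcCidr;
      if (rule.IpProtocol === "-1" || wholeNetwork) findings.push(describeRule(rule, range.CidrIp));
    }
  }
  return findings;
}

// Inbound rules of a hypothetical private application security group.
const appSgRules: IpPermission[] = [
  { IpProtocol: "tcp", FromPort: 8080, ToPort: 8080, UserIdGroupPairs: [{ GroupId: "sg-0123456789abcdef0" }] },
  { IpProtocol: "-1", IpRanges: [{ CidrIp: "10.0.0.0/16" }] },
  { IpProtocol: "tcp", FromPort: 5432, ToPort: 5432, IpRanges: [{ CidrIp: "10.0.1.0/24" }] },
];

console.log(overlyOpenRules(appSgRules, "10.0.0.0/16")); // flags "all traffic from 10.0.0.0/16"
```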

Utilize AWS Native Security Services

Continuously detect and respond to security risks by using AWS native services. Several AWS services support this, such as:

- AWS Config to assess, audit, and evaluate configurations of your resources

- AWS Security Hub to automate AWS security checks and centralize security alerts

- AWS CloudTrail to track user activity and AWS API usage

- Amazon Detective to analyze and visualize security data to investigate potential security issues

I believe that less is more, and I wouldn’t suggest enabling all of these services at once in the belief that this will make your AWS account secure. Instead, evaluate each service by enabling them one by one, and make sure you understand the outcome and security benefits of using it. For example, there is no real added value in setting up AWS Config rules or enabling security checks in AWS Security Hub if you don’t react to any of the findings. Therefore, start simple and improve your security posture step by step.
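Reacting to findings starts with knowing which ones are still open. The sketch below counts open Security Hub findings by severity. It uses a small subset of the AWS Security Finding Format (ASFF) fields, and the sample findings are invented. Here, "open" is assumed to mean an active record whose workflow status is `NEW` or `NOTIFIED`.

```typescript
type SeverityLabel = "INFORMATIONAL" | "LOW" | "MEDIUM" | "HIGH" | "CRITICAL";

// Small subset of the ASFF finding shape.
interface Finding {
  Title: string;
  Severity: { Label: SeverityLabel };
  Workflow: { Status: "NEW" | "NOTIFIED" | "RESOLVED" | "SUPPRESSED" };
  RecordState: "ACTIVE" | "ARCHIVED";
}

// Counts findings that are active and not yet resolved or suppressed.
function openFindingsBySeverity(findings: Finding[]): Record<SeverityLabel, number> {
  const counts: Record<SeverityLabel, number> = { CRITICAL: 0, HIGH: 0, MEDIUM: 0, LOW: 0, INFORMATIONAL: 0 };
  for (const f of findings) {
    const open = f.RecordState === "ACTIVE" && (f.Workflow.Status === "NEW" || f.Workflow.Status === "NOTIFIED");
    if (open) counts[f.Severity.Label] += 1;
  }
  return counts;
}

// Invented sample findings for illustration.
const findings: Finding[] = [
  { Title: "Example: bucket allows public read", Severity: { Label: "CRITICAL" }, Workflow: { Status: "NEW" }, RecordState: "ACTIVE" },
  { Title: "Example: root user without MFA", Severity: { Label: "CRITICAL" }, Workflow: { Status: "NOTIFIED" }, RecordState: "ACTIVE" },
  { Title: "Example: security group allows all ingress", Severity: { Label: "HIGH" }, Workflow: { Status: "RESOLVED" }, RecordState: "ACTIVE" },
  { Title: "Example: outdated finding", Severity: { Label: "MEDIUM" }, Workflow: { Status: "NEW" }, RecordState: "ARCHIVED" },
];

console.log(openFindingsBySeverity(findings)); // CRITICAL: 2, everything else 0
```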

Additional Ways to Improve Security of Your AWS Account

In the previous section, I described the foundational AWS security controls that I strongly suggest enabling for any AWS account. Now, let’s explore some other ways to improve the security of your AWS account that can be optionally applied based on the size of your organization or use case.

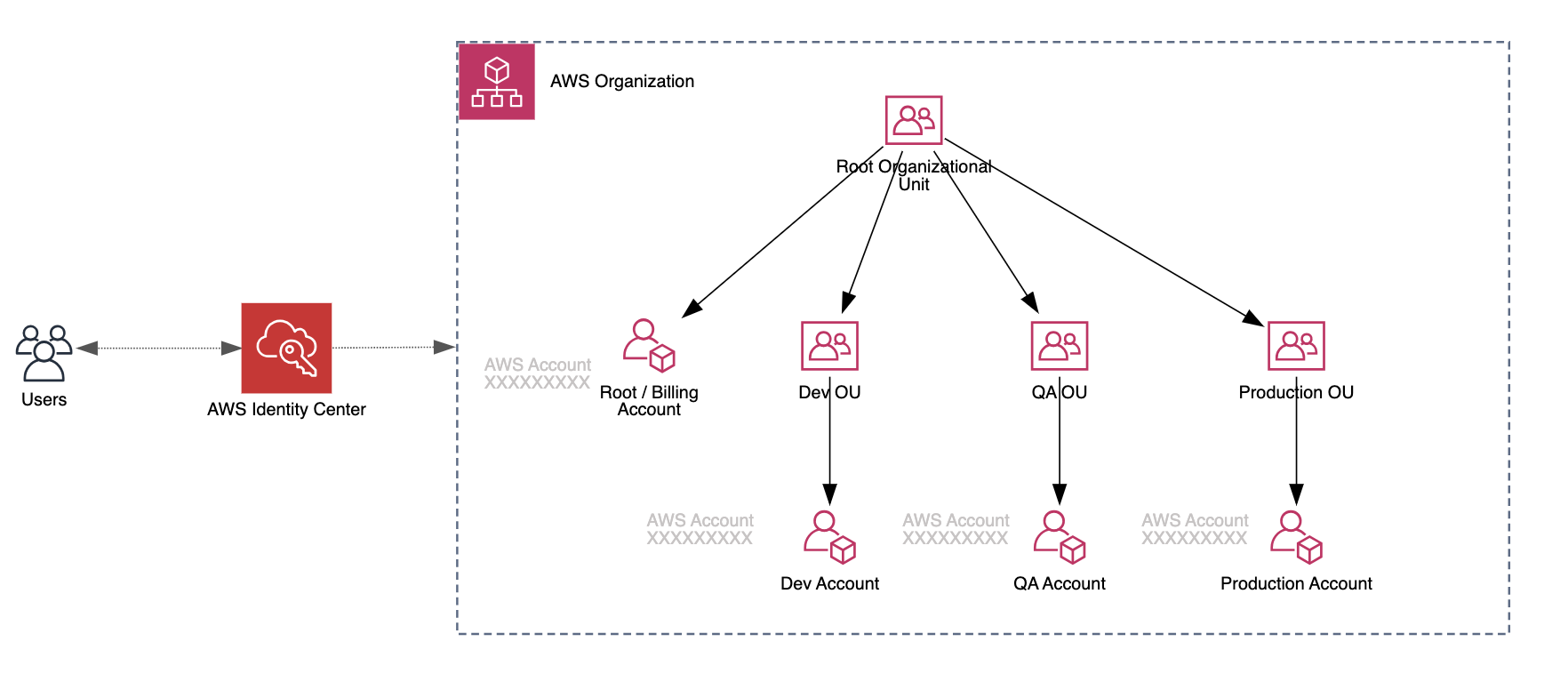

Start Using AWS IAM Identity Center and AWS Organizations

For anyone, from solopreneurs to small and medium-sized companies, with multiple AWS accounts, I suggest using the following (free of charge) AWS services for user and AWS account management:

- AWS IAM Identity Center for managing user access to AWS accounts and resources (e.g., via the AWS Console or CLI) instead of creating users directly in AWS IAM. This gives you one place to control user access for all AWS accounts.

- AWS Organizations for creating new AWS accounts and managing existing ones which nicely integrates with AWS IAM Identity Center where you can assign users to specific accounts and user groups.

Limit IAM Permissions for Development Users

Whether you are part of a large organization or a solopreneur, chances are that your development user / role has a lot more access rights than it needs to deploy your services to the AWS cloud.

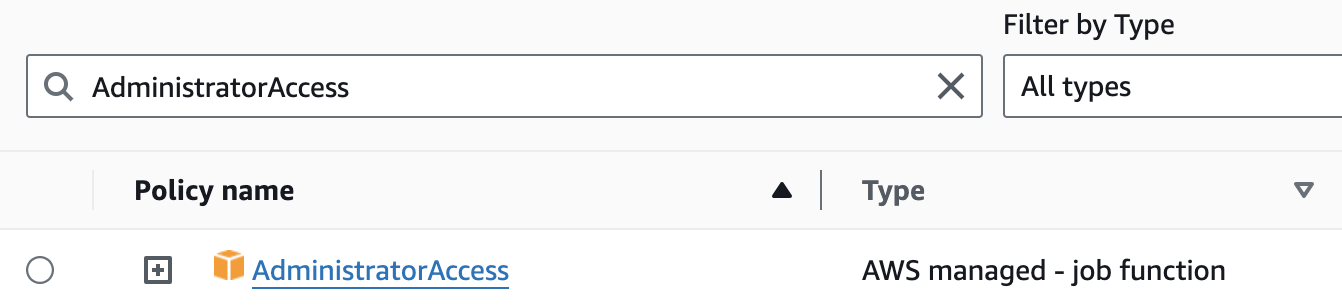

I have seen this quite often, even in large organizations with dedicated security teams—development users (and sometimes even production ones too) have the AWS managed policy AdministratorAccess assigned to them.

I totally understand this because creating a custom fine-grained IAM policy can take a lot of effort and iterations—especially if your team is just starting to build the product (e.g., exploring different AWS services to choose the right AWS architecture, etc.). While for some use cases you can initially use AdministratorAccess to kick-start new product development, in general, you should at least limit access at the AWS service level. For example, allow administrator access only for the AWS services that you plan to use or are currently using in your product or service.
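As a minimal sketch of service-level limiting, the helper below builds a policy that grants full access only to the service namespaces you list. The service list is an assumption for a typical serverless project. Be careful with `iam`: granting `iam:*` lets a user grant themselves anything. IAM permissions, such as `iam:PassRole` for deployments, are therefore better listed individually and scoped to specific resources.

```typescript
// Builds a policy granting full access only to the listed service namespaces,
// a middle ground between AdministratorAccess and fine-grained policies.
function serviceScopedAdminPolicy(serviceNamespaces: string[]) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Sid: "AdminForSelectedServices",
        Effect: "Allow",
        Action: serviceNamespaces.map((s) => `${s}:*`),
        Resource: "*",
      },
    ],
  };
}

// Hypothetical service list for a serverless product.
const devPolicy = serviceScopedAdminPolicy(["lambda", "apigateway", "dynamodb", "s3", "logs", "cloudformation"]);
console.log(JSON.stringify(devPolicy, null, 2));
```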

Configure the AWS CLI to Use IAM Identity Center Credentials

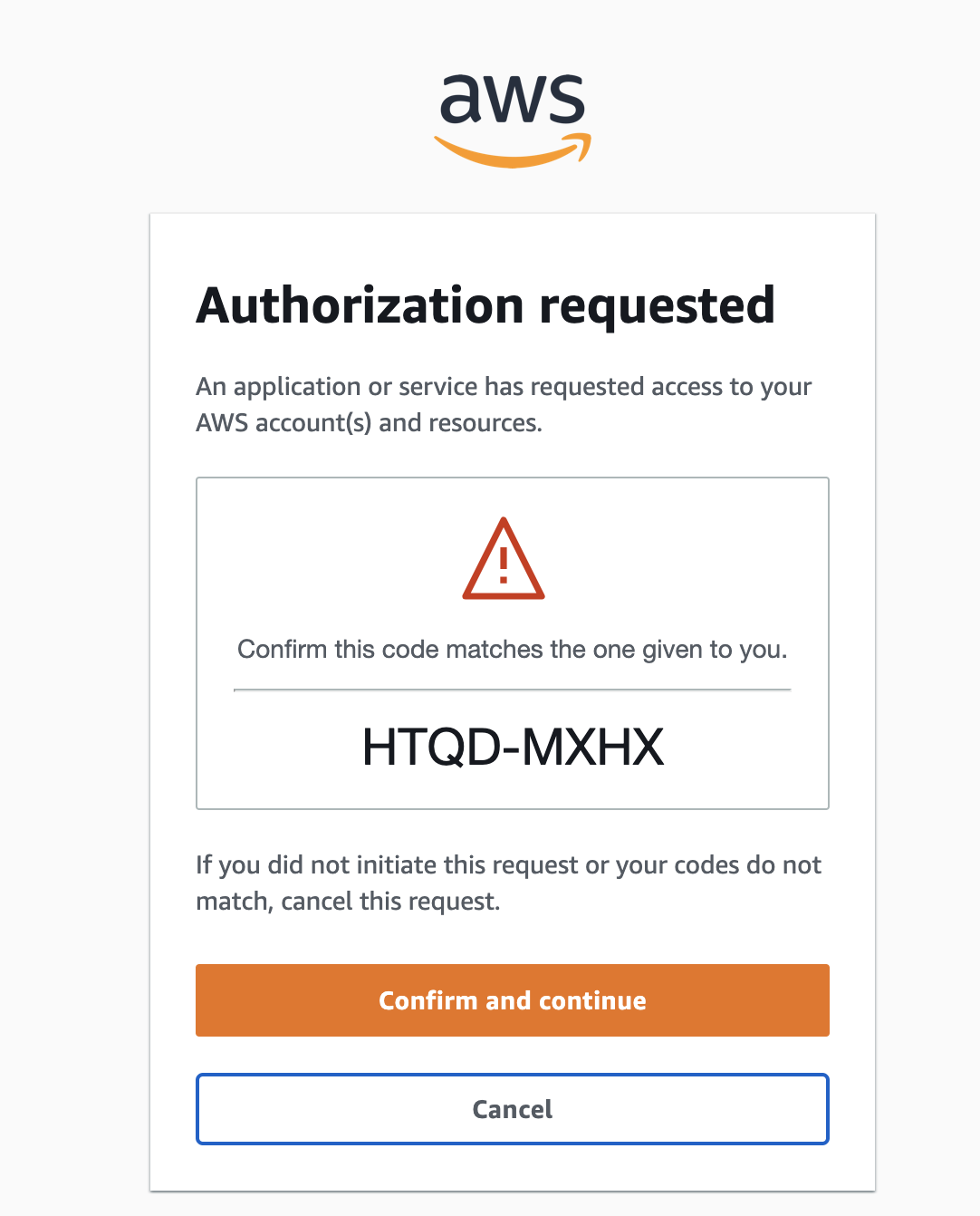

As I mentioned previously, you should avoid using persistent AWS access keys for accessing your AWS accounts or services and instead use temporary credentials. Now, let’s look at one of the ways we can accomplish that—by using AWS IAM Identity Center and AWS CLI (v2).

To configure the AWS CLI to use the AWS IAM Identity Center token provider, which retrieves refreshed authentication tokens, run the following command and provide the requested input parameters:

```console
$ aws configure sso
SSO session name (Recommended): your-sso-session
SSO start URL [None]: https://<my-sso-portal>.awsapps.com/start
SSO region [None]: eu-west-1
SSO registration scopes [None]: sso:account:access
```

Your default browser then opens to start the authentication process for your AWS IAM Identity Center user.

After a successful login, you will see the list of AWS accounts available to this user, which you can then set up as AWS CLI profiles. For example, if you configured a profile named “dev”, you would list all the S3 buckets in that account with the following command: `aws s3 ls --profile dev`.

To refresh the access token after it has expired, run: `aws sso login --sso-session your-sso-session`.
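`aws configure sso` stores its answers in `~/.aws/config` as an `sso-session` section plus one `profile` section per account. If you manage many accounts, generating those entries can be easier than answering the prompts repeatedly. The hypothetical `renderAwsConfig` helper below sketches that file format. The account ID and role name are placeholders.

```typescript
interface SsoProfile {
  name: string;
  accountId: string;
  roleName: string; // permission set name assigned in IAM Identity Center
  region: string;
}

// Renders ~/.aws/config entries in the format written by `aws configure sso`.
function renderAwsConfig(sessionName: string, startUrl: string, ssoRegion: string, profiles: SsoProfile[]): string {
  const lines = [
    `[sso-session ${sessionName}]`,
    `sso_start_url = ${startUrl}`,
    `sso_region = ${ssoRegion}`,
    `sso_registration_scopes = sso:account:access`,
  ];
  for (const p of profiles) {
    lines.push(
      "",
      `[profile ${p.name}]`,
      `sso_session = ${sessionName}`,
      `sso_account_id = ${p.accountId}`,
      `sso_role_name = ${p.roleName}`,
      `region = ${p.region}`,
    );
  }
  return lines.join("\n") + "\n";
}

const config = renderAwsConfig("your-sso-session", "https://<my-sso-portal>.awsapps.com/start", "eu-west-1", [
  { name: "dev", accountId: "111122223333", roleName: "DeveloperAccess", region: "eu-west-1" },
]);
console.log(config);
```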

Always Verify Default Settings Set by Your IaC Framework

Today, nearly any new product or feature is delivered (deployed) using one of the IaC tools like the Serverless Framework, CDK, Terraform etc. All of these tools provide different component-based functionalities with preset default settings to deploy your services to the AWS cloud. For example, to deploy a custom VPC using CDK, you just use the following code:

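```typescript
// CDK v2 imports (the CDK v1 packages such as @aws-cdk/core reached end of support in 2023).
import * as cdk from 'aws-cdk-lib';
import * as ec2 from 'aws-cdk-lib/aws-ec2';
import { Construct } from 'constructs';

export class MyStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);

    // No props: CDK applies its default VPC layout (subnets, route tables,
    // Internet Gateway, NAT Gateways) behind this single line.
    new ec2.Vpc(this, 'MyVPC');
  }
}
```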

As you can see, we only specified `new ec2.Vpc(this, 'MyVPC')` to create a custom VPC, but under the hood, a lot more resources are being deployed. The default configuration creates one public and one private subnet in each Availability Zone (up to three AZs). The public subnets have a route to an Internet Gateway, and the private subnets have a route to a NAT Gateway, with one NAT Gateway created per AZ. Isolated subnets, which have no routes outside the VPC, are only created if you request them explicitly.

While this gives us the ability to speed up our development process by quickly deploying different AWS resources, it can also introduce another “unknown” weak spot in our AWS cloud architecture. I strongly suggest that everyone takes the time to understand what is actually being deployed and with what settings in the AWS cloud. To better understand your AWS cloud architecture, you can use tools that automatically visualize it (for example, our own product, Cloudviz.io).
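One way to make these defaults visible is to state them explicitly, so that every subnet and NAT Gateway is a deliberate choice rather than a surprise. The sketch below uses CDK v2 `Vpc` props. The CIDR range, AZ count, and single NAT Gateway are assumptions for a small environment, not recommendations.

```typescript
import * as cdk from 'aws-cdk-lib';
import * as ec2 from 'aws-cdk-lib/aws-ec2';
import { Construct } from 'constructs';

export class ExplicitVpcStack extends cdk.Stack {
  public readonly vpc: ec2.Vpc;

  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);

    this.vpc = new ec2.Vpc(this, 'MyVPC', {
      ipAddresses: ec2.IpAddresses.cidr('10.0.0.0/16'),
      maxAzs: 2, // instead of the default of up to three AZs
      natGateways: 1, // one shared NAT Gateway instead of one per AZ
      subnetConfiguration: [
        { name: 'public', subnetType: ec2.SubnetType.PUBLIC, cidrMask: 24 },
        { name: 'private', subnetType: ec2.SubnetType.PRIVATE_WITH_EGRESS, cidrMask: 24 },
      ],
    });
  }
}
```

Running `cdk diff` or `cdk synth` before deploying shows the generated CloudFormation resources, so you can review them. A single NAT Gateway saves cost but makes outbound traffic from all private subnets depend on one AZ.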

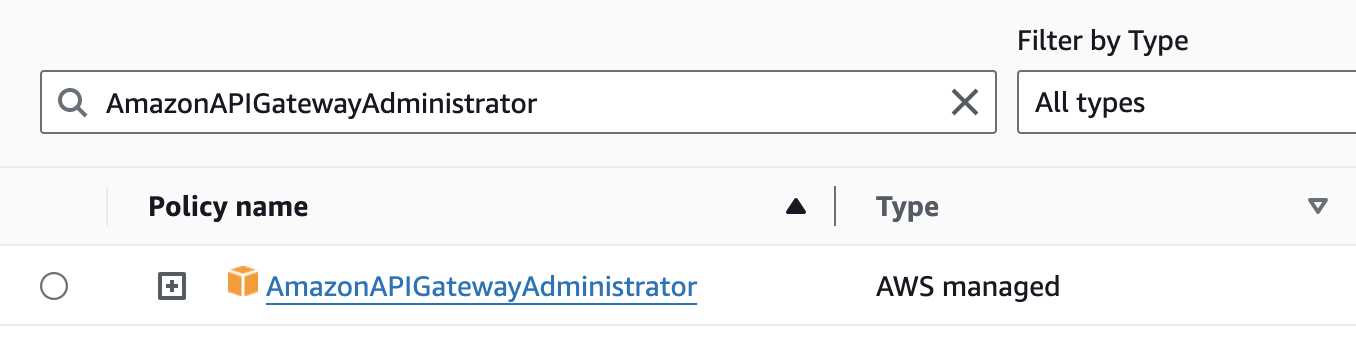

Limit IAM Permissions for IaC Deployment Roles

Just as you should limit AWS IAM permissions for your development users, you should limit the permissions of the IaC deployment roles and policies used in your CI/CD workflows. The only difference compared to development access policies is that, when deploying products and new functionality to the AWS cloud through CI/CD workflows, you should already know which AWS services and permissions the deployment uses. This allows you to create more detailed, least-privilege deployment IAM policies.

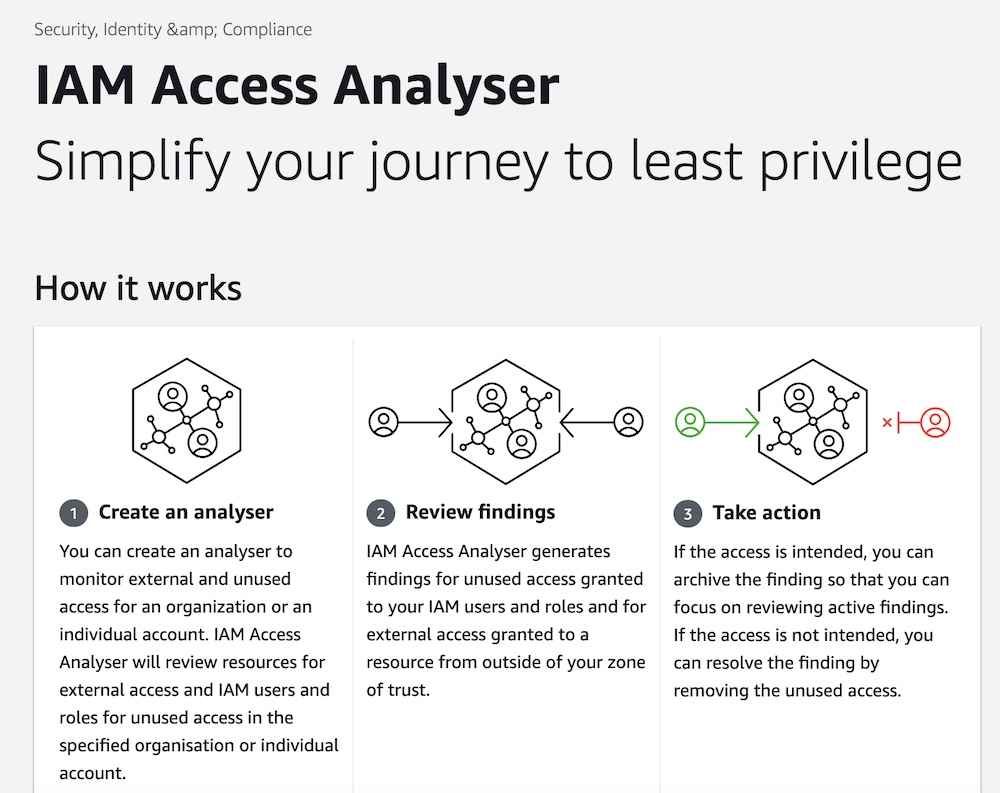

To analyze which permissions are being used or unused for your deployment IAM role, you can use IAM Access Analyzer.
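IAM also exposes "last accessed" data programmatically. You start a report with `GenerateServiceLastAccessedDetails` and read it with `GetServiceLastAccessedDetails`. The sketch below takes a subset of that report's `ServicesLastAccessed` entries and lists the services the role can access but has not used recently. Those services are candidates for removal from the deployment policy. The 90-day window and the sample data are assumptions.

```typescript
// Subset of the ServiceLastAccessed shape from IAM GetServiceLastAccessedDetails.
interface ServiceLastAccessed {
  ServiceName: string;
  ServiceNamespace: string;
  LastAuthenticated?: Date; // absent if never used within IAM's tracking period
}

// Service namespaces not used within the last `days` days.
function unusedServices(details: ServiceLastAccessed[], now: Date, days = 90): string[] {
  const cutoff = now.getTime() - days * 24 * 60 * 60 * 1000;
  return details
    .filter((s) => s.LastAuthenticated === undefined || s.LastAuthenticated.getTime() < cutoff)
    .map((s) => s.ServiceNamespace);
}

// Invented sample data for a deployment role.
const report: ServiceLastAccessed[] = [
  { ServiceName: "AWS CloudFormation", ServiceNamespace: "cloudformation", LastAuthenticated: new Date("2024-06-20T00:00:00Z") },
  { ServiceName: "Amazon S3", ServiceNamespace: "s3", LastAuthenticated: new Date("2024-06-25T00:00:00Z") },
  { ServiceName: "Amazon EC2", ServiceNamespace: "ec2" },
  { ServiceName: "Amazon RDS", ServiceNamespace: "rds", LastAuthenticated: new Date("2023-12-01T00:00:00Z") },
];

console.log(unusedServices(report, new Date("2024-06-26T00:00:00Z"))); // ec2 and rds
```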

Avoid Overly Descriptive Default API Gateway Response Messages

The suggestion to avoid detailed error messages in your API endpoints is often overlooked. When you use API Gateway, there are basically two main categories of error messages:

- Application-level errors: The ones that your application sends as a response when something fails, and that your front end reacts to.

- Gateway-level errors: The ones that are returned by API Gateway because the request didn’t even reach your application.

The first category is the one you usually control in your code, which lets you set specific messages based on your front-end UI requirements. The second category (API Gateway default responses) is often ignored by developers, mainly because of the assumption that default error responses are generic and don’t contain many details about why the request failed. However, this may not be the case in some scenarios, such as when you use IAM authentication to authorize your API requests.

For example, if your IAM access credentials only allow you to access the GET /users API, but you try to send a POST /users API request, you will receive this error message:

```json
{
  "message": "User: arn:aws:iam::123456789012:role/your-authorized-role is not authorized to perform: execute-api:Invoke on the resource: arn:aws:execute-api:eu-west-1:123456789012:123456/prod/POST/users with an explicit deny"
}
```

As you can see, this error message contains several data points, such as the AWS account ID, the AWS role ARN, and the API ID. While these details are not highly sensitive (anyone holding those credentials could retrieve them with `aws sts get-caller-identity`), the idea here is to show the importance of double-checking your default API Gateway responses. Most likely, your application will work just fine with a generic 403 error message, and you don’t need to be overly descriptive about what exactly failed, for example in the case of these response types:

INVALID_SIGNATURE:

```json
{
  "message": "The request signature we calculated does not match the signature you provided. Check your AWS Secret Access Key and signing method. Consult the service documentation for details."
}
```

WAF_FILTERED:

```json
{
  "message": "Your request has been blocked by AWS WAF."
}
```
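For REST APIs, you can replace these default messages with the API Gateway `PutGatewayResponse` API. In the SDK v3, this is `PutGatewayResponseCommand` in `@aws-sdk/client-api-gateway`. The sketch below builds one input per response type with the same generic 403 body. The REST API ID is a placeholder, and the chosen response types are an assumption. The `DEFAULT_4XX` and `DEFAULT_5XX` types can serve as catch-alls. Changes take effect after you redeploy the API stage.

```typescript
// Gateway response types whose default messages reveal more than a client needs.
const GENERIC_403_TYPES = ["ACCESS_DENIED", "INVALID_SIGNATURE", "WAF_FILTERED", "MISSING_AUTHENTICATION_TOKEN"] as const;

// Builds PutGatewayResponse inputs that return a generic body for each type.
function genericGatewayResponses(restApiId: string) {
  return GENERIC_403_TYPES.map((responseType) => ({
    restApiId,
    responseType,
    statusCode: "403",
    responseTemplates: { "application/json": '{"message":"Forbidden"}' },
  }));
}

const gatewayResponses = genericGatewayResponses("a1b2c3d4e5"); // placeholder REST API ID
for (const r of gatewayResponses) console.log(r.responseType, r.statusCode);
```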

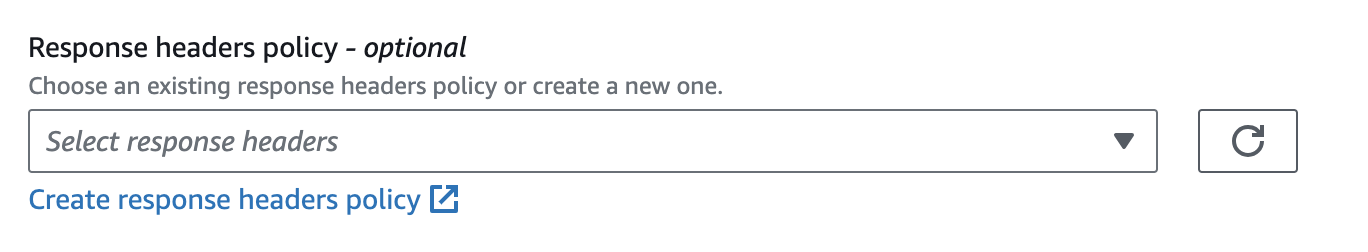

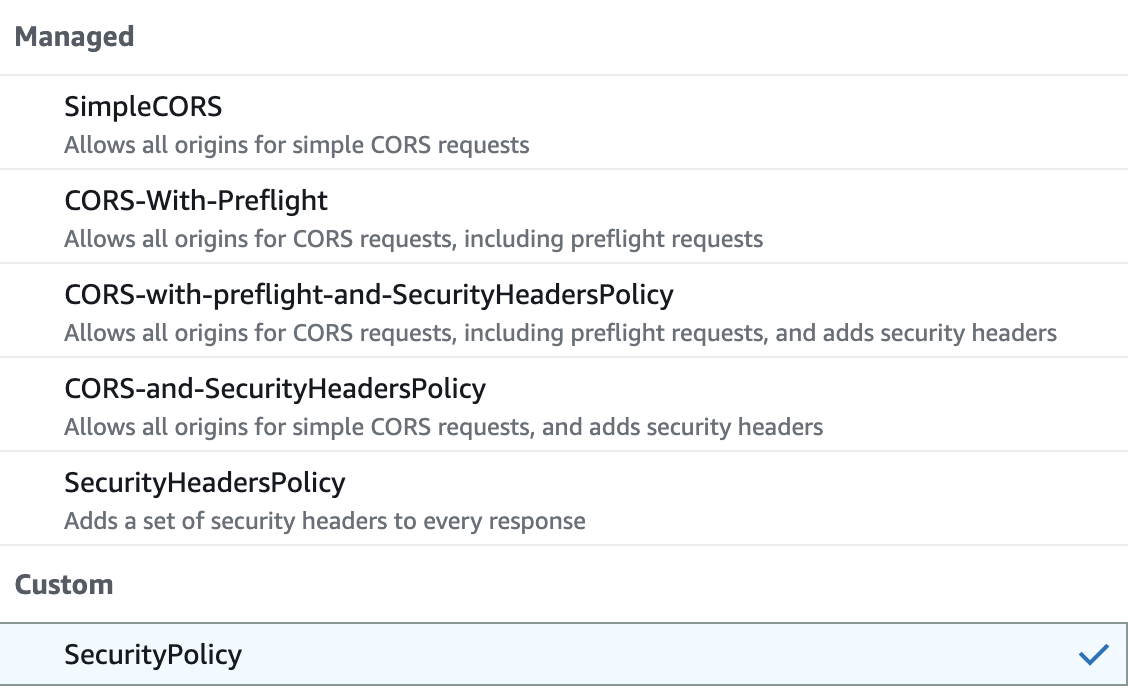

Use Security Response Headers for Your CloudFront Distribution

If you are using Amazon CloudFront as your content delivery network to distribute your web application static content like css, js, image files etc. (for example, stored in S3 bucket), chances are that your distribution behaviors don’t have any response headers policy set.

Because this is optional and isn’t part of the CloudFront distribution’s default settings, most IaC tools don’t set it when deploying a distribution. I suggest reviewing your web application’s use case, because there is a solid chance you will want to set security response headers that protect against certain types of attacks and vulnerabilities. The security response headers include:

- Strict-Transport-Security: This header is used to enforce secure (HTTP over SSL/TLS) connections to the server.

- X-Content-Type-Options: This header prevents the browser from interpreting files as a different MIME type to what is specified in the Content-Type HTTP header.

- X-Frame-Options: This header can be used to indicate whether a browser should be allowed to render a page within a frame or an iframe.

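- X-XSS-Protection: This header can be used to configure the XSS filtering mechanism built into some older web browsers. Most modern browsers have removed this feature, so Content-Security-Policy is the more important header today.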

- Content Security Policy (CSP): The Content-Security-Policy header helps to prevent a wide range of attacks, including XSS and data injection attacks. It allows you to specify the domains that the browser should consider to be valid sources of executable scripts.

You can use either the AWS managed SecurityHeadersPolicy or create your own custom response headers policy with your own values.
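For a custom policy, the settings live in the `SecurityHeadersConfig` part of a CloudFront response headers policy. The sketch below uses those field names and adds a hypothetical `headerValues` helper that approximates the header values a browser would receive. The HSTS max-age and the Content-Security-Policy string are assumptions. A real CSP has to be tailored to the scripts, styles, and domains your application loads.

```typescript
// Subset of CloudFront's SecurityHeadersConfig. Override: true means
// CloudFront replaces the header even if the origin already sent it.
interface SecurityHeadersConfig {
  StrictTransportSecurity: { AccessControlMaxAgeSec: number; IncludeSubdomains?: boolean; Preload?: boolean; Override: boolean };
  ContentTypeOptions: { Override: boolean };
  FrameOptions: { FrameOption: "DENY" | "SAMEORIGIN"; Override: boolean };
  XSSProtection?: { Protection: boolean; ModeBlock?: boolean; Override: boolean };
  ContentSecurityPolicy?: { ContentSecurityPolicy: string; Override: boolean };
}

const securityHeaders: SecurityHeadersConfig = {
  StrictTransportSecurity: { AccessControlMaxAgeSec: 63072000, IncludeSubdomains: true, Preload: true, Override: true },
  ContentTypeOptions: { Override: true },
  FrameOptions: { FrameOption: "DENY", Override: true },
  XSSProtection: { Protection: true, ModeBlock: true, Override: true },
  ContentSecurityPolicy: { ContentSecurityPolicy: "default-src 'self'; frame-ancestors 'none'", Override: true },
};

// Approximates the response headers produced by the configuration above.
function headerValues(cfg: SecurityHeadersConfig): Record<string, string> {
  const sts = cfg.StrictTransportSecurity;
  const headers: Record<string, string> = {
    "Strict-Transport-Security":
      `max-age=${sts.AccessControlMaxAgeSec}` + (sts.IncludeSubdomains ? "; includeSubDomains" : "") + (sts.Preload ? "; preload" : ""),
    "X-Content-Type-Options": "nosniff",
    "X-Frame-Options": cfg.FrameOptions.FrameOption,
  };
  if (cfg.XSSProtection) {
    headers["X-XSS-Protection"] = cfg.XSSProtection.Protection ? (cfg.XSSProtection.ModeBlock ? "1; mode=block" : "1") : "0";
  }
  if (cfg.ContentSecurityPolicy) {
    headers["Content-Security-Policy"] = cfg.ContentSecurityPolicy.ContentSecurityPolicy;
  }
  return headers;
}

console.log(headerValues(securityHeaders));
```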

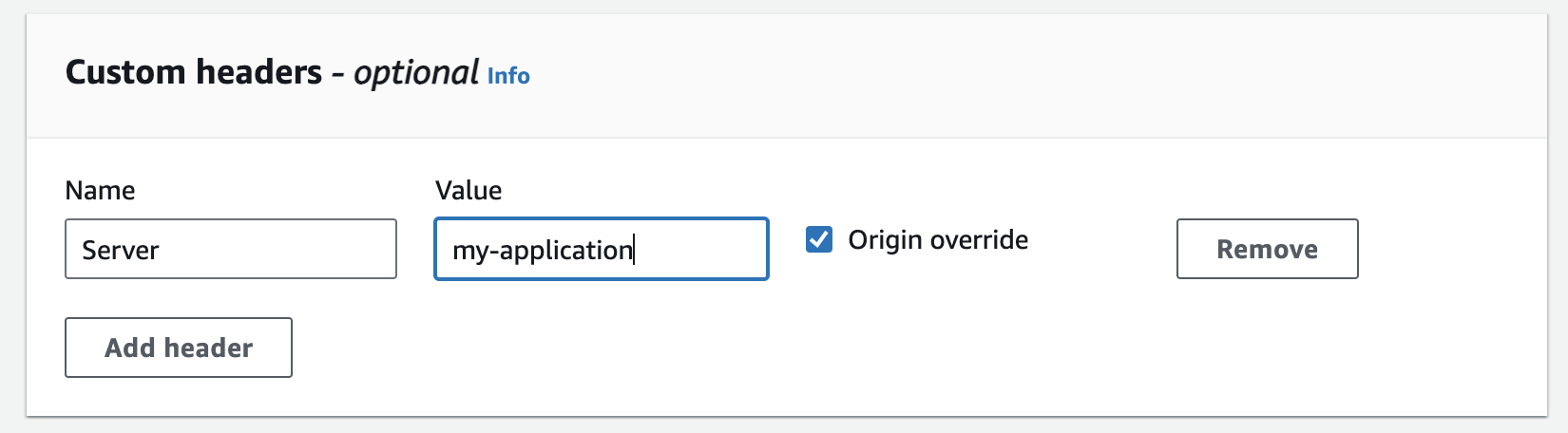

Override the “Server” Response Header for Your CloudFront Distribution

We should always try to limit exposure of information about our back-end services. It is a good security practice not to expose any specific server details. If you use CloudFront as your CDN, please check your response headers for the name “Server”. Chances are that this header will contain some details about your origin service/server. If CloudFront forwards the request to the origin server (for example, an Amazon S3 bucket, an Amazon EC2 instance, or another web server) and the origin server includes a "Server" header in its response, CloudFront will include this header in its response to the client.

You can’t remove this header in CloudFront, but you can override it with a default value, such as your application name, which doesn’t expose any additional details about your back-end services. To do that, add a custom header using a response header policy and select Origin override.
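In the CloudFront API, the "Origin override" option corresponds to `Override: true` on an item in the response headers policy's `CustomHeadersConfig`. The sketch below builds that fragment and simulates its effect with the hypothetical `applyCustomHeaders` helper. The replacement value "my-app" is a placeholder. The simulation treats header names case-insensitively, as HTTP does.

```typescript
interface CustomHeader {
  Header: string;
  Value: string;
  Override: boolean;
}

// Fragment of a CloudFront ResponseHeadersPolicyConfig that replaces "Server".
function serverHeaderOverride(value: string) {
  return {
    CustomHeadersConfig: {
      Quantity: 1,
      Items: [{ Header: "Server", Value: value, Override: true }],
    },
  };
}

// Simulation: with Override: true the origin's value is replaced;
// with Override: false the origin's value is kept if present.
function applyCustomHeaders(originHeaders: Record<string, string>, custom: CustomHeader[]): Record<string, string> {
  const result: Record<string, string> = { ...originHeaders };
  for (const h of custom) {
    const existing = Object.keys(result).find((k) => k.toLowerCase() === h.Header.toLowerCase());
    if (existing === undefined) {
      result[h.Header] = h.Value;
    } else if (h.Override) {
      delete result[existing];
      result[h.Header] = h.Value;
    }
  }
  return result;
}

const policyFragment = serverHeaderOverride("my-app");
const served = applyCustomHeaders({ server: "AmazonS3", "content-type": "text/html" }, policyFragment.CustomHeadersConfig.Items);
console.log(served); // Server: my-app instead of the origin's value
```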

Summary

Improving the security of your AWS account is a continuous journey. You will never reach a point where you can say that your AWS account and its running services are 100% secure. No one is safe from possible cyberattacks; even large organizations that spend tons of money on their security operations have weaknesses. But what we can all do is limit potential security issues as much as possible by starting with foundational AWS security controls and ensuring that these are enforced. Once these are in place, we can start to look at each AWS service we use (for example, API Gateway, CloudFront, IAM, etc.) to run our products and apply more detailed security controls, some of which are described in the additional security controls section. Let’s make our AWS environments more secure!

Copyright © 2019 - 2025 Cloudviz Solutions SIA